Will The Real AI Please Stand Up?

Three things that get called “AI” and the challenges and impacts of each

The term “Artificial Intelligence” (AI) has become an overused buzzword that, it seems, anyone will slap onto anything these days. Beyond the buzzy bits, AI has morphed into an umbrella term that covers a wide range of technologies that may or may not actually *be* AI. If it’s a computer doing things that help make work go faster or easier or better, it tends to get the trendy moniker. Understanding what AI really is can be confusing. AI technologies vary significantly in complexity, capabilities, and underlying mechanisms. Some are highly specialized systems that excel in narrow tasks, while others aim to mimic human intelligence, a feat that remains a theoretical exercise.

Under this umbrella are three distinct categories: Artificial General Intelligence (AGI), Large Language Models (LLMs, more commonly referred to by the public as Generative AI), and automated software tools. AGI represents the ambitious goal of creating machines that can think and reason across diverse fields just as humans do. While AGI remains theoretical, Large Language Models like ChatGPT are already transforming industries by processing and generating plausible-enough human-like text, but they have very clear limitations in understanding things beyond their training. On the more pragmatic side, automated software tools (while less mind-blowing) play an essential role in streamlining repetitive tasks, decision-making, and data analysis. And those tools have been around for years, even before the now-ubiquitous AI label.

Each of these versions of AI comes with its own set of capabilities and challenges. AGI promises a leap into a future where machines could rival or surpass human intelligence. LLMs offer more immediate, practical applications, though they still operate within confined settings. Automated tools, though often overlooked, continue to revolutionize business processes by helping humans be more productive and efficient no matter their industry. Let’s take a look at the nuances of each, examining their impacts, limitations, and what they mean for you.

General Intelligence AI

- Deep Learning – a method in artificial intelligence (AI) that teaches computers to process data in a way that is inspired by the human brain.

- Neural Network – a computer system modeled on the human brain and nervous system.

- Singularity – a hypothetical future point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable consequences for human civilization.

- Quantum Computing – computing that makes use of the quantum states of subatomic particles to store information.

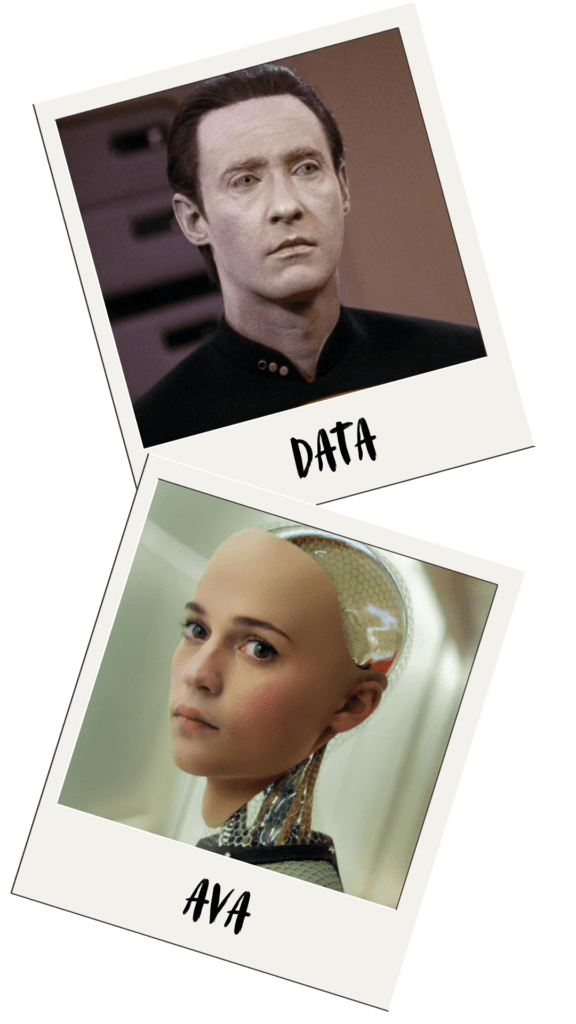

The idea of Artificial General Intelligence (AGI) is a machine with the ability to understand, learn, and apply knowledge across an unlimited range of tasks, much like our human meat bag brains. The promise of AGI lies in its potential to reason, solve complex problems, and adapt to new situations without needing human input or intervention. This is far beyond the territory of algorithms. The most ambitious of predictions are for a machine capable of thinking creatively, making decisions, and even experiencing emotions or consciousness. Think Star Trek’s beloved Data, played by Brent Spiner, or Ava in Ex Machina, played by Alicia Vikander.

While futurists and researchers are passionate about the pursuit of human-like machines, the reality is that replicating the depth and flexibility of human cognition is extraordinarily complex. Our minds are capable of subtle reasoning, emotional intelligence, and abstract thinking – all qualities that current AI systems cannot begin to match.

So-called “Godfather of AI” Geoffrey Hinton says that AGI could happen in 20 years, but people have been saying this for a LONG time. Back in 1967, Marvin Minsky (former head of the MIT AI lab) predicted that general AI would be substantially solved “within a generation.” It still isn’t here.

Despite rapid advancements and aggressive predictions, nothing currently comes close to the adaptability and generalization that AGI would require. Cracking this nut is not simply a matter of more data or better algorithms; AGI would demand breakthroughs in understanding human consciousness, intuition, and creativity, areas where we are still in the dark.

This author, for one, is very grateful that this type of AI does not exist since matching or surpassing human intelligence brings a whole host of ethical, moral, and existential risks that humanity has continuously shown we’re unprepared to manage.

For now, all existing AI systems are designed to excel at specific, human-defined tasks. While this can include everything from playing chess to diagnosing diseases, they are unable to apply their knowledge outside their programmed function. As much as we talk about AI transforming industries and society, these systems are simply machines that operate within very defined limits and do not work without human input.

Large Language Models (LLMs)

- Algorithm – a set of rules that a machine can follow to learn how to do a task.

- Corpus – a large dataset of written or spoken material that can be used to train a machine to perform linguistic tasks.

- Data Mining – the process of analyzing datasets in order to discover new patterns that might improve the model.

- Hallucination – refers to outputs that deviate significantly from real data or expected outcomes.

- Machine Learning – a branch of artificial intelligence (AI) and computer science that focuses on using data and algorithms to enable AI to imitate the way that humans learn, gradually improving its accuracy.

- Natural Language Processing – a subfield of computer science and artificial intelligence (AI) that uses machine learning to enable computers to understand and communicate with human language.

- Weak AI (also called Narrow AI) – a type of artificial intelligence that is limited to a specific or narrow area.

LLMs (also called Generative AI) like ChatGPT are AI systems trained on enormous amounts of text data so that they can understand and generate plausible-sounding, human-like responses. While these models excel in Natural Language Processing (NLP) tasks such as answering questions, summarizing content, and powering conversational chatbots, they cannot adapt to new contexts without additional training.

How do they work?

LLMs are built on advanced machine learning algorithms and trained on vast datasets containing text from books, articles, websites, and other sources. These models work by analyzing patterns in the data to understand context and predict the most likely next word or phrase in a sentence. Through this process, LLMs can generate responses that appear highly intelligent. However, it’s all a ruse, since these models are limited by the data they’ve been trained on and cannot adapt without additional retraining or fine-tuning on new data. Moreover, the technology aims for plausible-sounding answers, not correct ones.
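To make the "predict the most likely next word" idea concrete, here is a deliberately tiny sketch in Python. It is not how a real LLM is built (those use neural networks trained on vastly more text); it only counts which word follows which in a made-up sentence. The function names and the sample text are assumptions invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = corpus.lower().split()
    model = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model


def predict_next(model: dict, word: str):
    """Return the follower seen most often in training, or None if the word is unknown."""
    followers = model.get(word.lower())
    if not followers:
        return None  # the model has nothing to say about words it never saw
    return followers.most_common(1)[0][0]


# Hypothetical training text, invented for this example.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)

print(predict_next(model, "the"))  # the most frequent follower of "the" in the corpus
print(predict_next(model, "dog"))  # a word outside the training data
```

The toy model picks a word because it was statistically common after "the", not because it knows anything about cats, and it has no answer at all for a word it never saw in training.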

What are the challenges?

1. AI works to sound plausible, not be “correct.”

One of the fundamental limitations of LLMs is that while they can generate responses that may be deemed “good” or “bad” based on metrics like grammar, fluency, and relevance, they don’t understand why a response is good or bad. This is because LLMs operate based on statistical patterns rather than genuine comprehension. For example, they might generate an insightful response to a question about quantum mechanics, but they don’t truly understand the concepts. They are simply predicting the most likely correct phrases based on their training – they’re trying to sound plausible enough. When output is evaluated, it’s judged by how closely it aligns with human expectations, but the model itself has no concept of whether its response is factually correct, logically sound, or ethically appropriate.

Because of this, AI can go off the rails, sometimes manifesting in incorrect predictions, misinformation, misleading advice, false positives, false negatives, made-up historical facts, and downright bizarre interactions, which can be both terrifying and hilarious. In fact, a research paper from 2023 found that 47% of ChatGPT’s references were fabricated. 100% made up. This is such a huge problem across the board that Microsoft is already working on a separate service that reviews LLM content and “corrects” hallucinations.

2. AI is a black box.

Another issue with LLMs is the “black box” problem. Even though AI developers built and trained them, the developers themselves don’t fully understand how the models arrive at specific outputs. Although we can track the algorithms and training data used to build LLMs, the internal decision-making processes that occur when they generate responses are highly complex and difficult to interpret. This lack of interpretability presents ethical and practical concerns, particularly in critical areas like healthcare, legal decisions, or any system where understanding the reasoning behind AI’s actions is crucial for accountability, trust, and safety. Addressing the black box problem is an ongoing challenge in AI development, with researchers seeking ways to make AI models more transparent and interpretable without sacrificing their performance.

3. AI can eat its own tail.

Consider also that LLMs are now being used by 53% of Americans (as surveyed by Adobe; here are more stats focused on business usage) for online content creation, email generation, and more. When will we run out of fresh, 100% human-generated content to train LLMs on? When will we be in danger of trashing the whole system because we’ve entered a “recursive learning” phase, a “self-consuming training loop” that effectively trains an LLM on its own outputs? Since LLM outputs still largely need human review and revision, we’re on the horizon of a garbage in, garbage out scenario that renders them useless. And we all know how bad the quality is when you Xerox a Xerox that’s been Xeroxed. (Or, I suppose, a different metaphor for our younger audience: when your grandfather gives you a printed page of a cell phone photo of an original photograph…) Anyway, you get the idea: every new iteration is that much worse than the previous version.

- AI Inbreeding – inbreeding refers to the production of offspring from genetically similar members of a population thus leading to genomic …

- Model Autophagy Disorder (MAD) – derived from the Greek meaning “self-devouring,” aptly describes models consuming their own outputs as training data. The phenomenon reveals how unchecked AI risks becoming detached from human needs through recursive self-feeding.

- Model Collapse – researchers’ name for what happens to generative AI models when they’re trained using data produced by other AIs rather than human beings.
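The Xerox-of-a-Xerox effect can be sketched with a toy simulation in Python. This is an illustration under heavy assumptions, not a model of any real LLM: "training" here just means memorizing the words it was given, and "generating" means randomly re-sampling from them. The function names, the sample sentence, and the seed are all invented for the example.

```python
import random


def train_and_generate(training_words: list, n_words: int, rng: random.Random) -> list:
    """Toy stand-in for an LLM: output words sampled from whatever it was trained on."""
    return rng.choices(training_words, k=n_words)


def simulate(human_words: list, generations: int, seed: int = 0) -> list:
    """Train each generation on the previous generation's output; track vocabulary size."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    data = list(human_words)
    vocab_sizes = [len(set(data))]
    for _ in range(generations):
        data = train_and_generate(data, len(data), rng)
        vocab_sizes.append(len(set(data)))
    return vocab_sizes


# Hypothetical "human-written" text, invented for this example.
human_words = "the quick brown fox jumps over the lazy dog near a quiet river bank".split()
sizes = simulate(human_words, generations=10)
print(sizes)  # number of distinct words remaining after each generation
```

Because each generation can only reuse words that appeared in the previous generation's output, the number of distinct words can stay level or shrink but never recover. Once a rare word drops out, it is gone for good unless fresh human-written text is added back in.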

How do we deploy AI in a safe way?

While LLMs are powerful tools for automating language-related tasks, their inability to understand context and the black-box nature of their inner workings demand continued human oversight. It’s okay to use LLMs to help your employees work faster, but they should be used within a strategy where errors are acceptable. Here are some of Vertical’s takeaways on how to utilize AI effectively while lowering your risk:

1. Don’t have your AI interact with humans unattended.

Bard, Google’s AI chatbot, made a factual error in its first demo back in 2023. As we discuss in Understanding AI Part 1, it’s not just alarming that a chatbot can publish factual errors with such blatant confidence. It’s the fact that it feels trustworthy that’s the bigger problem. As a general rule, we recommend not putting LLMs in direct contact with your customers. Keep a human in the loop who is knowledgeable and can detect suspect outputs.

2. Do use AI for monitoring and alerting supervisors.

3. Do use AI for doing initial reporting analysis before passing to a capable employee for review.

4. Do use it to assist agents.

AI is great at finding information relevant to a conversation within the company databases (KBs, customer records, etc.) in real-time so the agent doesn’t have to look it up, wasting precious time. AI can also transcribe calls or make notes about the conversation so the agent doesn’t have to (though, they should still review it).

5. Do train AI-based chatbots on your organization’s data, not a general training data set.

AI-based chatbots can be used effectively if they are given a very tight and specific lane. They can be great for tasks like password resets, providing canned information like schedules, etc. They should be trained and focused on your organization’s data, not a general training data set. If you keep their focus narrow and give them only as much as they need to be successful and accurate, AI chatbots can be a great way for customers and constituents to self-serve, freeing your human agents to handle more complex and nuanced situations.

6. Do plan to monitor your LLM AI tools regularly.

It’s also worth noting that the “set it and forget it” mindset does not apply to these types of AI tools. “If not properly monitored over time, even the most well-trained, unbiased AI model can ‘drift’ from its original parameters and produce unwanted results when deployed. Drift detection is a core component of strong AI governance.” (IBM) These tools require continuous oversight, supervision, and periodic updates to ensure they are performing optimally and delivering expected results. Changes in data inputs, business rules, or external conditions can disrupt automated systems, leading to errors, inefficiencies, or even critical failures if not caught in time. A data analysis tool might generate misleading reports if fed outdated or incomplete information; an automated decision-making tool could miss key variables without regular adjustments. Regular audits, performance monitoring, and human oversight are essential to ensure these tools remain effective and aligned with business goals. While these tools can be exciting, they won’t be “taking our jobs” (as so many feared at the beginning), but rather adding another level of work for us. Human oversight is required both at the point of output and to maintain working order.
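What a regular monitoring check might look like can be sketched in a few lines of Python. This is a minimal illustration, assuming you already record some quality score for the tool each week (for example, the share of answers a human reviewer marked as acceptable). The function name, the threshold, and the sample numbers are all hypothetical, and real drift detection products use more sophisticated statistics.

```python
from statistics import mean, stdev


def has_drifted(baseline: list, recent: list, threshold: float = 3.0) -> bool:
    """Flag drift when the recent average sits far outside the baseline's normal spread."""
    baseline_mean = mean(baseline)
    baseline_spread = stdev(baseline)
    if baseline_spread == 0:
        # No variation in the baseline: any change at all counts as drift.
        return mean(recent) != baseline_mean
    # How many baseline standard deviations the recent average has moved.
    distance = abs(mean(recent) - baseline_mean) / baseline_spread
    return distance > threshold


# Hypothetical weekly quality scores, invented for this example.
baseline_scores = [0.90, 0.92, 0.91, 0.89, 0.93]
steady_scores = [0.91, 0.90, 0.92]
slipping_scores = [0.70, 0.72, 0.68]

print(has_drifted(baseline_scores, steady_scores))    # scores in line with the baseline
print(has_drifted(baseline_scores, slipping_scores))  # scores well below the baseline
```

A check like this does not fix anything by itself. Its job is to alert a person that the tool's behavior has changed so that a human can investigate.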

It shouldn’t be news, though, that humans need to be involved in the ongoing care and feeding (so to speak) of our tools – cars, homes, pianos, and phones require the occasional look-see, cleaning, maintenance, or replacement.

Costs

While LLMs appear to be a low-cost solution for many tasks, it’s only because the companies offering them are not setting pricing based on their costs; they are offering unnaturally low pricing to get customers in the door. This is a common tech industry tactic used with most new and exciting technologies: build the market, develop a need, then (hopefully) use the scale to deal with the real costs of delivery.

While there are premium accounts and paid software, right now all it takes for the average person to get access to ChatGPT, for example, is the time it takes to set up a free account, but AI is insanely expensive. Companies are spending huge amounts of money on infrastructure, electricity and cooling. Data centers cost a ton and eventually, companies will stop racing to win the market and investors will want to see a profit. At that moment, AI will become really expensive, because it already is. The AI companies just aren’t passing the cost on to customers…yet.

And the costs of AI extend far beyond the initial development of algorithms and software – there are significant infrastructure and environmental impacts associated with running these systems. One major factor is the reliance on massive data centers to train and operate AI models, especially resource-intensive ones like Large Language Models (LLMs). These data centers require enormous amounts of energy to power thousands of high-performance servers that run 24/7, processing vast amounts of data. This energy usage not only contributes to high operational costs but also has environmental consequences, as many data centers rely on non-renewable energy sources, contributing to carbon emissions. The computational power needed to train large AI models, such as GPT or other advanced systems, can consume as much electricity as hundreds of households over several weeks or months.

In addition, as someone who lives in Northern Virginia – home to the largest concentration of data centers in the world – this author is acutely aware of the physical presence that all these cloud tools require. (And man, are they loud.)

Another often overlooked cost is water usage. The Washington Post recently illustrated just how much water these data centers need to keep these systems cool. In areas with water scarcity, this can exacerbate local environmental stresses. For instance, some large AI data centers use millions of gallons of water annually just for cooling.

While AI promises great efficiencies in many areas, the costs of maintaining the infrastructure required to run AI systems at scale pose not just a huge future cost to the end user but also ethical and environmental challenges that need to be addressed before they become more of a problem. [This just in at the time of writing: A sneak peek at the cost of AI; OpenAI seeks $150B in startup funds.]

Automated Software Tools

Automated software tools are a key part of all the things labeled AI. They’re designed to handle task-oriented functions such as data analysis, simple decision making, and process automation. But these tools aren’t new – businesses have been using forms of automation for years to streamline workflows and reduce the need for human intervention in repetitive tasks, freeing up humans for more complex work. Vertical has been offering communications solutions layered with this type of AI technology for years. Slapping the AI label on these tools is a marketing attempt to make them sound more important, relevant, and must-have than they are.

Back in the early 1600s Netherlands, the price of tulips skyrocketed and then dramatically collapsed. Tulip Mania is now used to metaphorically “refer to any large economic bubble when asset prices deviate from intrinsic values.” Taking a slight leap, this author uses it as a reminder to look at the actual value something has for your company and not the value others have placed on a thing.

Tools in the Automated Software category include everything from scheduling algorithms and chatbots to more complex data processing systems used in industries like finance, healthcare, and manufacturing. While not glamorous, this is the future that was promised to us (though we’re still waiting for those jetpacks). These “robots” can improve our efficiency and productivity by handling time-consuming tasks like scheduling meetings, sorting emails, running standard reports, or summarizing information, theoretically freeing us up for more strategic and high-level activities.

These tools, however, are only effective within very specific defined contexts. They operate by following strict rules and parameters and while they may seem sophisticated, they can’t “learn” or adapt beyond their programming. For example, a customer service chatbot might efficiently handle frequently asked questions by providing scripted responses based on keywords. But if a customer poses a question outside the predefined set of queries or uses unusual phrasing, the chatbot will struggle to offer a meaningful response. It won’t know how to respond without being reprogrammed to handle the new input, requiring human intervention to adjust the system and expand its capabilities. This limitation makes it essential to provide ongoing maintenance and updates to keep the system functional and relevant as customer needs evolve.
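The keyword-driven chatbot described above can be sketched in a few lines of Python. This is a hypothetical illustration rather than any particular product: the keywords, the scripted answers, and the fallback message are all invented for the example.

```python
# Hypothetical scripted answers, keyed by the keyword that triggers each one.
SCRIPTED_RESPONSES = {
    "password": "You can reset your password from the login page.",
    "hours": "We are open Monday through Friday, 9am to 5pm.",
}

FALLBACK = "Sorry, I didn't understand that. Let me connect you with a person."


def reply(message: str) -> str:
    """Return the scripted answer for the first known keyword found in the message."""
    text = message.lower()
    for keyword, response in SCRIPTED_RESPONSES.items():
        if keyword in text:
            return response
    # No rule matched: the bot cannot improvise, so it hands off to a human.
    return FALLBACK


print(reply("I forgot my password"))   # matches the "password" rule
print(reply("What are your hours?"))   # matches the "hours" rule
print(reply("When do you close?"))     # same question, unusual phrasing: no rule matches
```

The last message asks about opening hours just like the second one does, but because it never uses the word "hours" the bot falls through to the handoff. Covering that phrasing means a person has to add a new rule, which is the ongoing maintenance the paragraph above describes.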

Keep humans in the loop with your AI so they can detect suspect outputs or programming that needs updates.

If an organization chooses to make automated tools part of how it functions, it needs to invest in routine supervision of them to handle unexpected issues or scenarios where the tools might fail. Automated software is excellent at handling predictable, rule-based tasks, but when it encounters unfamiliar situations, it may either freeze or make incorrect decisions. Human involvement and oversight can mitigate or prevent these scenarios. A blend of automation with human involvement and oversight can help maintain a balance between efficiency and reliability, ensuring these AI-driven tools continue to serve their intended purpose effectively.

Conclusion

We seem to be living in the wild west of AI – everyone throwing the term around with excitement and fear of what AI is now and what it could be in the future. As AI evolves, understanding the distinctions between these types of systems is critical for businesses and individuals in order to leverage the right tools for their specific needs. As you continue your journey in a world where AI is seemingly everywhere, keep these 4 things in mind:

1. Remember the tulips.

When making decisions about AI or any other new thing for your organization, remember the Tulip Mania. Keep in mind the value it might have for you and your company, NOT the value a vendor tells you it should have.

2. Always proceed with intention.

Don’t use flashy tech for the sake of flashy tech; make sure the things you implement serve your needs in the right way. Take pragmatic and strategic steps with technology to ensure it serves your company’s mission or vision.

3. Consider future costs.

AI is cheap now because companies are trying to grow demand. But your costs could be exponentially higher in the future if the whole system hasn’t collapsed on itself by then. Save yourself potential trouble by forecasting the higher costs and what they mean for your business plan. If your business heavily adopts AI tools, your costs may dramatically increase.

4. Vertical Experts are here to help you.

Looking for assistance implementing AI? Vertical can help you determine the helpful uses of AI for your business or organization. Our experts can help you set up AI within your larger customer experience strategy. Reach out today and one of our Experts will be in touch soon.